Data lakes, built on technologies such as Amazon S3, Azure Blob Storage, and Google Cloud Storage, have emerged as versatile platforms for storing diverse data types. They support a broad range of analytics and serve as critical resources in our data-centric era. Dataddo helps you unlock the full potential of these platforms by delivering data efficiently to them and to many other data storage technologies.

Understanding Your Data

Before diving into the specifics of data configuration, it's vital to have a solid understanding of your data. This means recognizing its unique properties, how it changes over time, and how these changes should be reflected in your analysis.

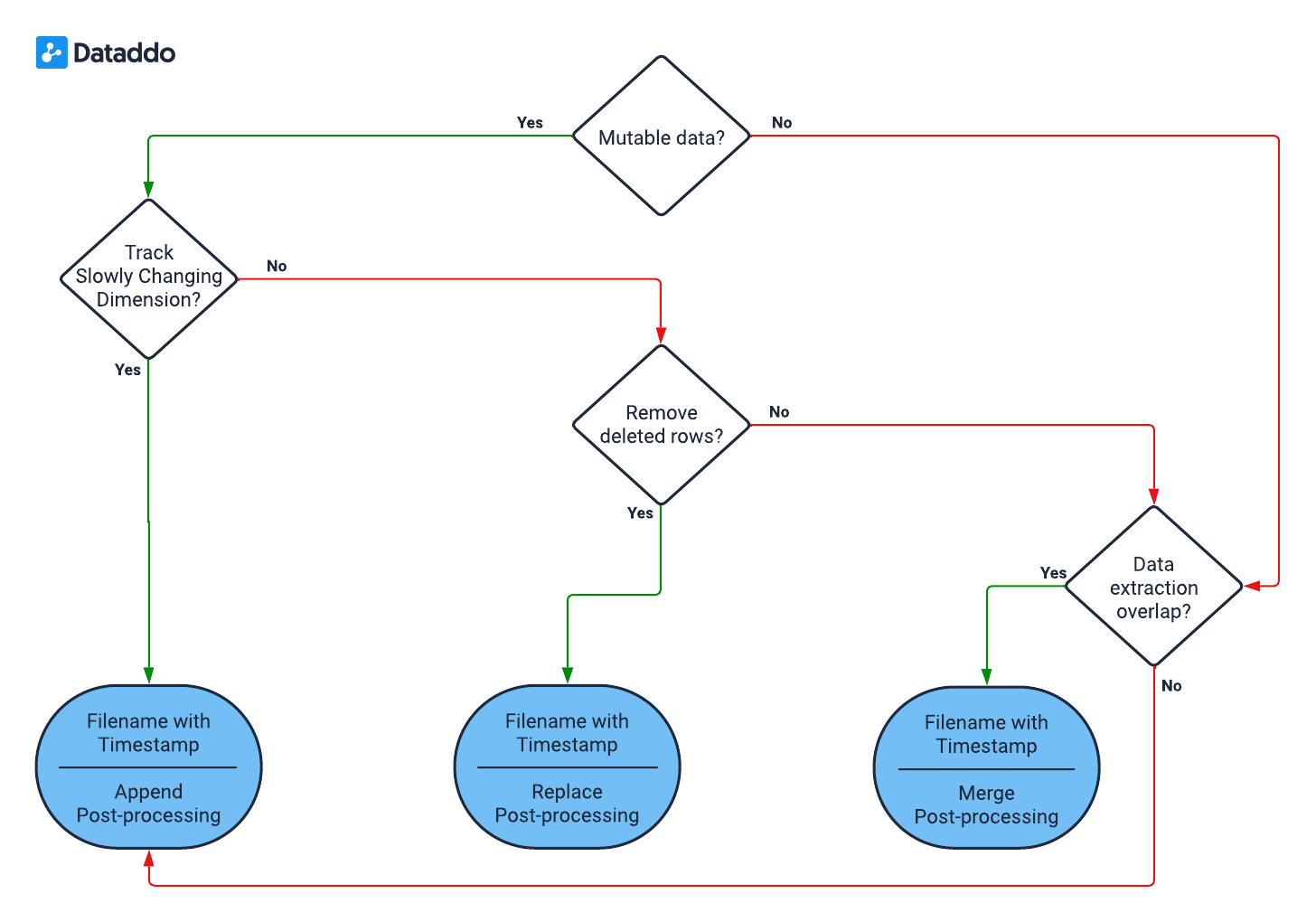

Use the decision schema above, together with the questions below, to better understand your data.

Is the Data Mutable?

This question asks whether data that has already been extracted, and is identified by a common identifier, can change in subsequent extractions. In other words, can a record be altered after its initial extraction?

As an example, consider a row containing an invoice with a unique identifier, like an invoice number. If the status of the invoice changes from 'Pending' to 'Paid', would this update be captured in your next data extraction? If yes, then the data is mutable: it changes over time.
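To check this empirically, you can compare two consecutive extractions row by row, keyed on their common identifier. The sketch below is a minimal illustration that assumes each extraction is available as a list of dictionaries; the `changed_keys` helper and the `invoice_number` and `status` field names are hypothetical and not part of Dataddo.

```python
from typing import Dict, List

Row = Dict[str, str]


def changed_keys(previous: List[Row], current: List[Row], key: str) -> List[str]:
    """Return identifiers whose row contents differ between two extractions."""
    previous_by_key = {row[key]: row for row in previous}
    changed = []
    for row in current:
        old_row = previous_by_key.get(row[key])
        # Same identifier, different contents: the row was altered after extraction.
        if old_row is not None and old_row != row:
            changed.append(row[key])
    return changed


monday = [{"invoice_number": "INV-001", "status": "Pending"}]
tuesday = [{"invoice_number": "INV-001", "status": "Paid"}]
print(changed_keys(monday, tuesday, "invoice_number"))  # ['INV-001']
```

A non-empty result for a pair of extractions is evidence that the data is mutable; an empty result for a single pair does not prove the opposite.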

Do You Need to Track Slowly Changing Dimensions (Changes Over Time)?

This question assesses if it's necessary to monitor and record the evolution of data values over time. In data warehousing, this concept is known as Slowly Changing Dimensions (SCD). It involves tracking changes (like updates or modifications) to data elements, keeping a historical record, and observing how these changes impact the system over time.

As an example, think about a product's price in a Product table. If the price changes, do you need to keep a record of the old price, or is it enough to just update to the new price? If keeping the historical price data is important for your analysis or business decisions, then you need to track Slowly Changing Dimensions.
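One common way to keep that history is a Type 2 Slowly Changing Dimension: instead of overwriting a row, you close its current version and add a new version with its own validity range. The sketch below assumes an in-memory list of version records; the `record_price_change` helper and the `valid_from` and `valid_to` field names are illustrative, not something Dataddo produces.

```python
from datetime import date
from typing import Dict, List, Optional


def record_price_change(history: List[Dict], product_id: str, new_price: float, changed_on: date) -> None:
    """Close the open version of a product and append a new one (SCD Type 2 style)."""
    current: Optional[Dict] = None
    for version in history:
        if version["product_id"] == product_id and version["valid_to"] is None:
            current = version
            break
    if current is not None:
        if current["price"] == new_price:
            return  # Nothing changed, so the open version stays as it is.
        current["valid_to"] = changed_on  # Retire the old price instead of overwriting it.
    history.append({"product_id": product_id, "price": new_price,
                    "valid_from": changed_on, "valid_to": None})


history: List[Dict] = []
record_price_change(history, "P-1", 10.0, date(2024, 1, 1))
record_price_change(history, "P-1", 12.5, date(2024, 3, 1))
for version in history:
    print(version)
```

Both prices remain queryable: the old one with its closing date, the new one as the open version.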

Do Deleted Rows Need Removing?

This question determines if the data extraction process must account for rows that have been deleted in the source data. In other words, it's asking if the removal of records in the source must be reflected in the destination.

As an example, suppose a record is deleted from the source data. Must your data set or dashboard also reflect this deletion? If 'yes', then the deleted rows need removing.
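If each extraction contains the full dataset, deleted rows can be detected as identifiers that were present before but are missing now. The sketch below assumes full (not incremental) extractions held as lists of dictionaries; with incremental extractions, a missing row may simply fall outside the extraction window. The `deleted_keys` and `drop_deleted` helpers are hypothetical.

```python
from typing import Dict, List, Set

Row = Dict[str, str]


def deleted_keys(previous: List[Row], current: List[Row], key: str) -> Set[str]:
    """Identifiers present in the previous full extraction but absent from the current one."""
    return {row[key] for row in previous} - {row[key] for row in current}


def drop_deleted(destination: List[Row], removed: Set[str], key: str) -> List[Row]:
    """Remove rows whose identifier no longer exists in the source."""
    return [row for row in destination if row[key] not in removed]


previous = [{"invoice_number": "INV-001"}, {"invoice_number": "INV-002"}]
current = [{"invoice_number": "INV-001"}]
removed = deleted_keys(previous, current, "invoice_number")
print(removed)  # {'INV-002'}
print(drop_deleted(previous, removed, "invoice_number"))  # [{'invoice_number': 'INV-001'}]
```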

Do Data Extractions Cover Overlapping Periods?

This question asks whether your extraction process can include data from the same time period in more than one extraction.

As an example, consider your daily extraction process. Does each extraction include data from previous days? For instance, does Tuesday's extraction include data from Monday, and does Wednesday's extraction include data from Tuesday? If 'yes', your data extraction has overlapping periods. If each extraction only contains new data from that day, then there is no overlap.
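If you know the date range each extraction covers, overlap can be checked by sorting the ranges by start date and comparing neighbours. The sketch below assumes each extraction is described by an inclusive (start, end) pair of dates; `has_overlap` is a hypothetical helper.

```python
from datetime import date
from typing import List, Tuple

Window = Tuple[date, date]  # Inclusive start and end dates covered by one extraction.


def has_overlap(windows: List[Window]) -> bool:
    """Return True if any two extraction windows share at least one day."""
    ordered = sorted(windows)
    # After sorting by start date, any overlap also shows up between neighbours.
    for (_, previous_end), (next_start, _) in zip(ordered, ordered[1:]):
        if next_start <= previous_end:
            return True
    return False


# Tuesday's run covers Mon-Tue and Wednesday's covers Tue-Wed, so they overlap.
overlapping = [(date(2024, 1, 1), date(2024, 1, 2)), (date(2024, 1, 2), date(2024, 1, 3))]
# Each daily run covers only its own day, so there is no overlap.
daily = [(date(2024, 1, 1), date(2024, 1, 1)), (date(2024, 1, 2), date(2024, 1, 2))]
print(has_overlap(overlapping))  # True
print(has_overlap(daily))  # False
```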

Configurations

Append-Ready Timestamped Files

Use this configuration when you have mutable data and need to track slowly changing dimensions.

- Set Write Mode to Create New File or Replace if Exists. This ensures that Dataddo creates a new snapshot file during each data extraction process, preserving the previous state of the data and allowing for tracking of slowly changing dimensions.

- Set Filename to the option **that includes a timestamp**. This ensures that each file loaded to your data lake has the actual extraction timestamp appended to its name.

- Set File Format to either CSV or Parquet:

  - Parquet: An optimized columnar storage format ideal for analytics.

  - CSV: A widely used format, simple and compatible with numerous tools.

- In the analytical tool that loads data from your data lake, make sure the loading mechanism is set up to append data. Because each file is a time-based snapshot, appending preserves the historical state of each data point and keeps it available for analysis.
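The append step can be sketched in Python as follows. It assumes the snapshots are CSV files in a local folder (for example, synced from your bucket) and that each file name ends in a 14-digit timestamp; that pattern, the `append_snapshots` helper, and the added `snapshot_time` column are hypothetical. Parquet files would need a reader such as pyarrow instead of the `csv` module.

```python
import csv
import re
from datetime import datetime
from pathlib import Path
from typing import Dict, List

# Hypothetical file name pattern: <name>_<YYYYMMDDHHMMSS>.csv.
# Adapt it to the timestamp format your Dataddo file names actually use.
TIMESTAMP_RE = re.compile(r"_(\d{14})\.csv$")


def snapshot_time(path: Path) -> datetime:
    """Parse the extraction timestamp embedded in a file name."""
    match = TIMESTAMP_RE.search(path.name)
    if match is None:
        raise ValueError(f"no timestamp in file name: {path.name}")
    return datetime.strptime(match.group(1), "%Y%m%d%H%M%S")


def append_snapshots(folder: Path) -> List[Dict[str, str]]:
    """Append every CSV snapshot oldest-first, tagging rows with their snapshot time."""
    rows: List[Dict[str, str]] = []
    for path in sorted(folder.glob("*.csv"), key=snapshot_time):
        taken_at = snapshot_time(path).isoformat()
        with path.open(newline="") as handle:
            for row in csv.DictReader(handle):
                row["snapshot_time"] = taken_at  # Keeps each version distinguishable.
                rows.append(row)
    return rows
```

Nothing is overwritten: an invoice that was 'Pending' in one snapshot and 'Paid' in the next appears twice, once per snapshot time.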

Replace-Ready Files (Optionally Timestamped)

When you handle mutable data and tracking Slowly Changing Dimensions (SCD) isn't a priority, but omitting deleted rows from subsequent analytics is, the "Replace-Ready Files" configuration is especially valuable. This setup saves the latest state of the data, optionally with a timestamp in the filename. Unlike configurations that retain all historical snapshots, this approach replaces the entire dataset with the newest file.

- Set Write Mode to Create New File or Replace if Exists. This ensures that Dataddo either creates a new snapshot file or replaces an existing one during each extraction process, reflecting the latest state of the data.

- Set Filename to the option either with or without a timestamp. With a timestamp, each extraction creates a new file; without one, each extraction overwrites the existing file. This choice affects the post-processing steps in your analytical tool.

- Set File Format to either CSV or Parquet:

  - Parquet: An optimized columnar storage format ideal for analytics.

  - CSV: A widely used format, simple and compatible with numerous tools.

- When loading data from your data lake, make sure you retrieve the most recent file based on your naming convention or timestamp, and replace the previously loaded dataset with it. If you use timestamps, automate the loading procedure so that it recognizes and picks up the latest timestamped file.
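Picking the latest timestamped file can be automated along these lines. This is a sketch that assumes the files sit in a local folder and that each name ends in a 14-digit timestamp followed by `.csv` or `.parquet`; the pattern and the `latest_file` helper are hypothetical.

```python
import re
from datetime import datetime
from pathlib import Path
from typing import List, Optional, Tuple

# Hypothetical file name pattern: <name>_<YYYYMMDDHHMMSS>.csv or .parquet.
# Adapt it to the timestamp format your Dataddo file names actually use.
TIMESTAMP_RE = re.compile(r"_(\d{14})\.(?:csv|parquet)$")


def latest_file(folder: Path) -> Optional[Path]:
    """Return the file with the newest timestamp in its name, or None if none match."""
    candidates: List[Tuple[datetime, Path]] = []
    for path in folder.iterdir():
        match = TIMESTAMP_RE.search(path.name)
        if match:
            stamp = datetime.strptime(match.group(1), "%Y%m%d%H%M%S")
            candidates.append((stamp, path))
    if not candidates:
        return None
    # Compare on the parsed timestamp only, not on the path.
    return max(candidates, key=lambda candidate: candidate[0])[1]
```

Load the returned file and replace the previously loaded dataset with it in full, rather than appending. If you chose the filename without a timestamp, the name stays constant and this lookup is unnecessary: simply reload that single file.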

Merge-Ready Timestamped Files

When working with mutable data, it is sometimes critical to retain the complete historical context while integrating newer data seamlessly. The "Merge-Ready Timestamped Files" configuration is designed for these scenarios. Each extraction is saved as a snapshot with a timestamp in its filename, so every historical snapshot is preserved while newer files can be merged with prior data.

- Set Write Mode to Create New File or Replace if Exists. This makes sure Dataddo produces a new timestamped snapshot file with every extraction, preserving the complete historical context.

- Set Filename to the option that includes a timestamp. This ensures each extraction results in a distinct snapshot, enabling straightforward merging of data over time.

- Set File Format to either CSV or Parquet:

  - Parquet: An optimized columnar storage format ideal for analytics.

  - CSV: A widely used format, simple and compatible with numerous tools.

- When pulling data from your data lake, set up a process that merges the timestamped files in chronological order. Automate the merging mechanism in your analytical tool so that it recognizes newer timestamped files and integrates them with the existing dataset, matching rows by their common identifier. This gives a comprehensive view that covers both historical and recent data.
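A chronological merge can be sketched as an upsert: read the snapshots oldest-first and let each newer row overwrite the older row with the same identifier. The sketch assumes CSV snapshots in a local folder whose names end in a 14-digit timestamp; the pattern and the `merge_snapshots` helper are hypothetical, and Parquet files would need a reader such as pyarrow.

```python
import csv
import re
from datetime import datetime
from pathlib import Path
from typing import Dict

# Hypothetical file name pattern: <name>_<YYYYMMDDHHMMSS>.csv.
# Adapt it to the timestamp format your Dataddo file names actually use.
TIMESTAMP_RE = re.compile(r"_(\d{14})\.csv$")


def snapshot_time(path: Path) -> datetime:
    """Parse the extraction timestamp embedded in a file name."""
    match = TIMESTAMP_RE.search(path.name)
    if match is None:
        raise ValueError(f"no timestamp in file name: {path.name}")
    return datetime.strptime(match.group(1), "%Y%m%d%H%M%S")


def merge_snapshots(folder: Path, key: str) -> Dict[str, Dict[str, str]]:
    """Merge CSV snapshots oldest-first so the newest version of each row wins."""
    merged: Dict[str, Dict[str, str]] = {}
    for path in sorted(folder.glob("*.csv"), key=snapshot_time):
        with path.open(newline="") as handle:
            for row in csv.DictReader(handle):
                # Upsert: new identifiers are inserted, existing ones are overwritten.
                merged[row[key]] = row
    return merged
```

The snapshot files themselves remain in the data lake as the historical record. Note that rows missing from newer files keep their last known values in the merged result, so this sketch does not remove rows deleted at the source.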