Dataddo's Data Backfilling feature is ideal for database replication tasks. It facilitates the data transfer from more traditional database systems such as MySQL, PostgreSQL, or SQL Server to cloud-based data storage solutions like Snowflake, BigQuery, Redshift, or Databricks.

In case you need to backfill data from business apps or other cloud services, please refer to this article.

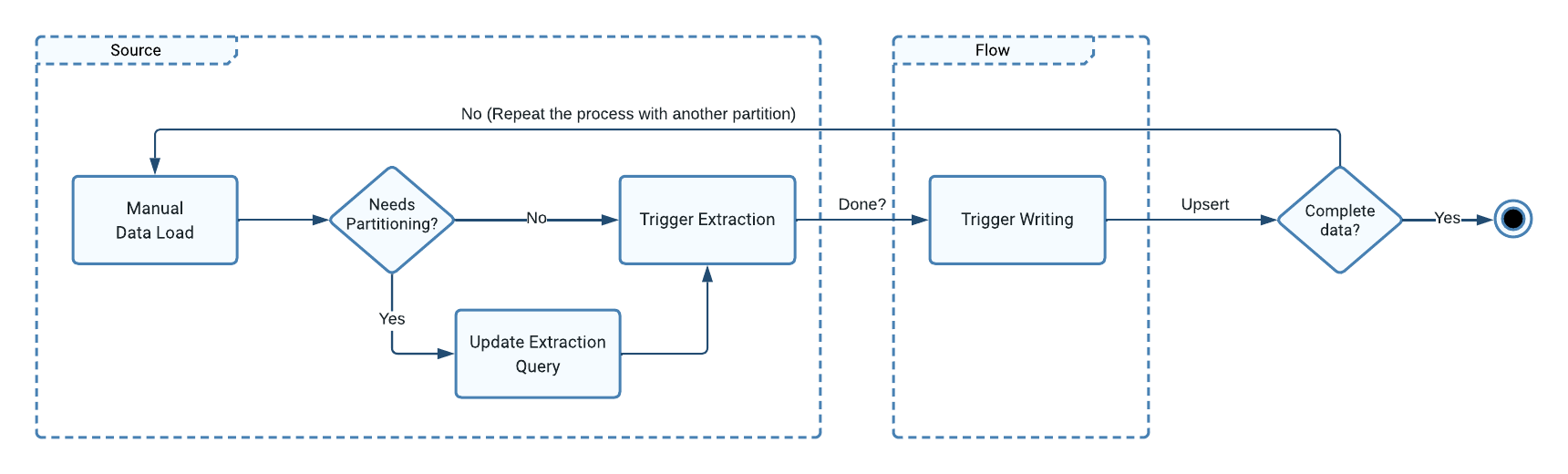

The Process

- Go to the Sources page and trigger the extraction for your selected source. If needed, specify the partition, e.g. using OFFSET and LIMIT options.

- Initiate the data writing in the relevant flow. For large data sets, you may need to use partitioning. The recommended maximum size of single partition is 5M rows.

Trigger the Extraction

- Go to the Sources page and click on your source.

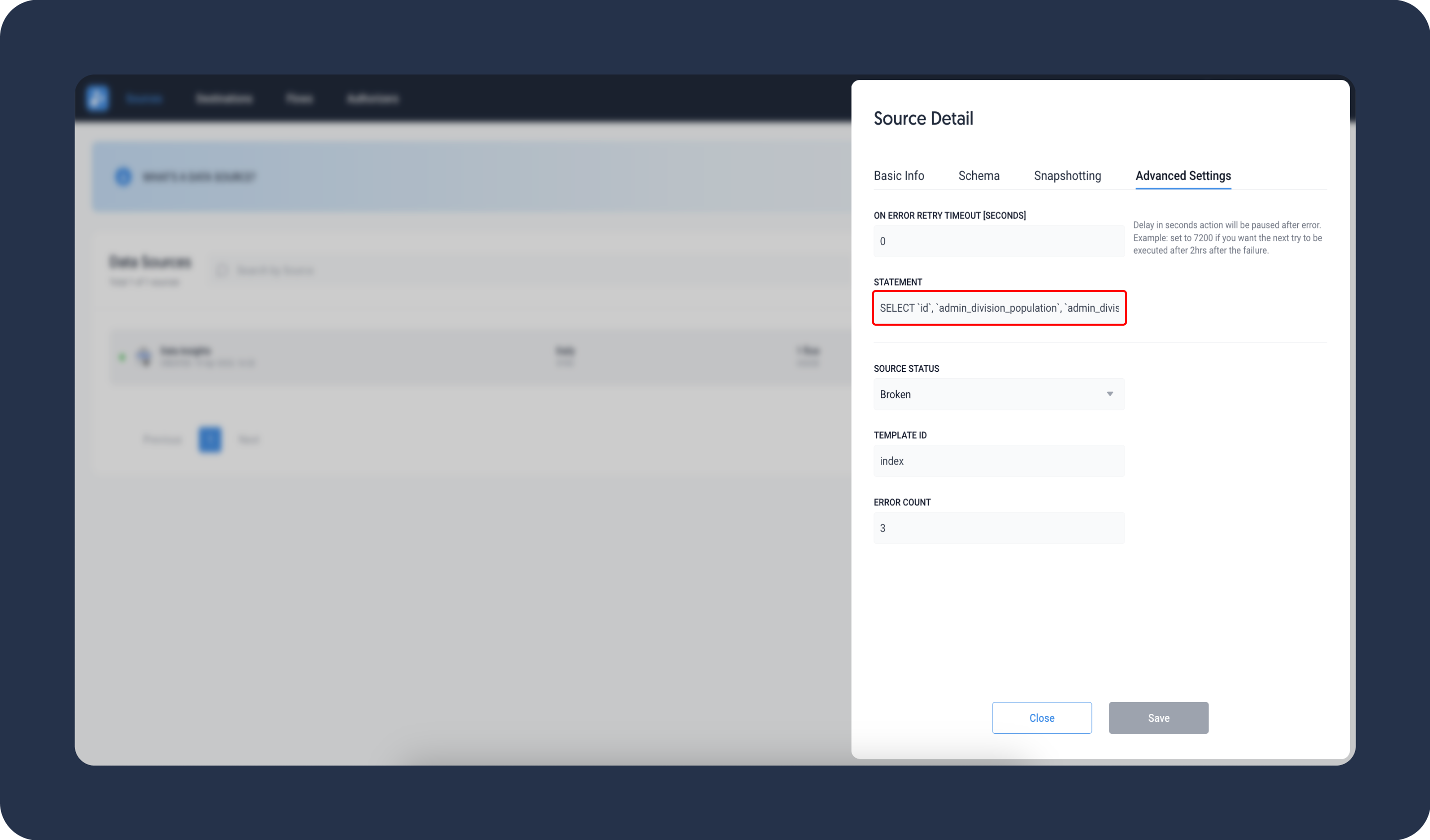

- Go to the Advanced Settings tab and edit the Statement field to temporarily update the extraction query (e.g. add partitioning clauses). For more information, see the section on updating the extraction query.

- Save your configuration.

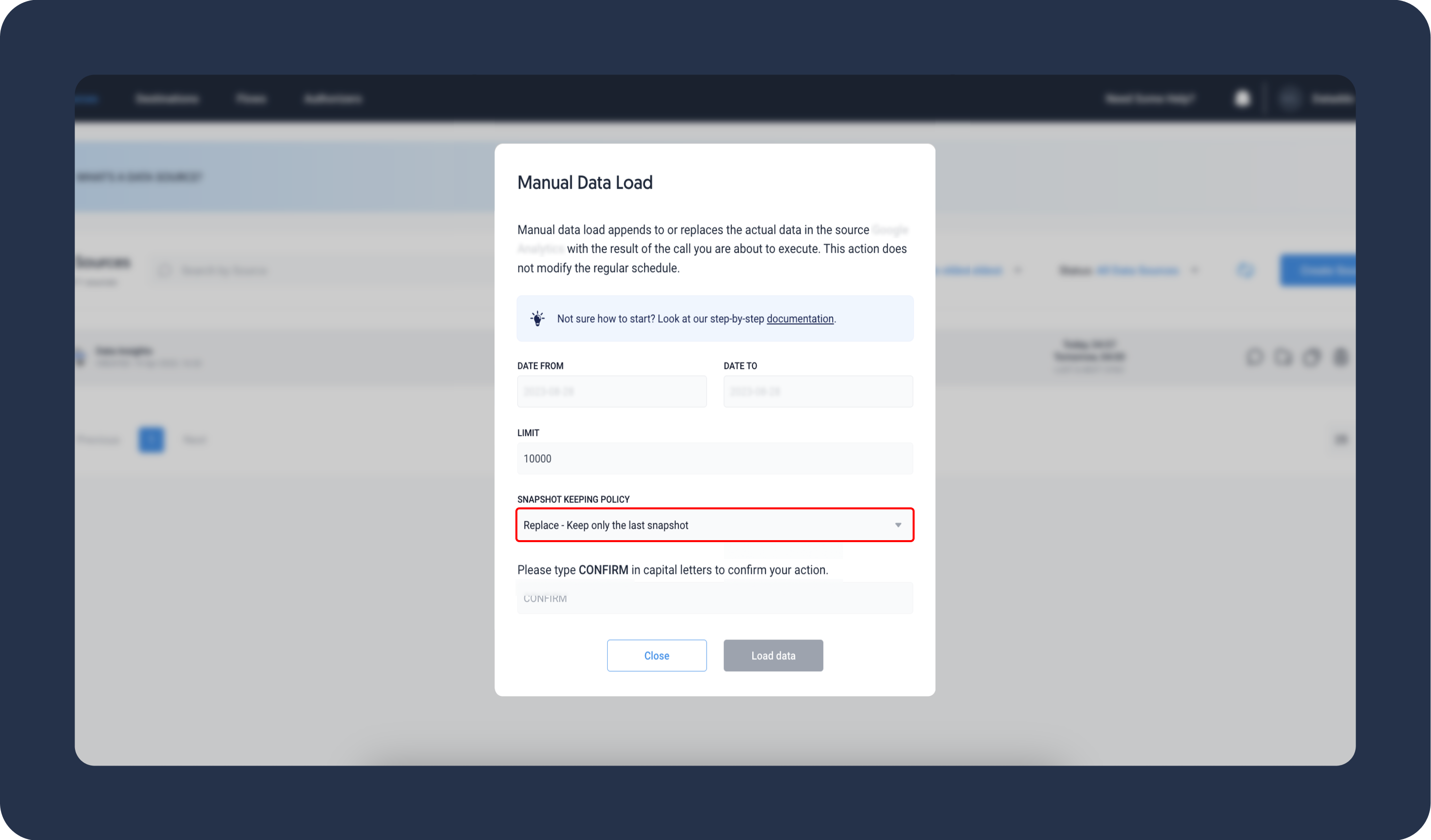

- Click on the Manual Data Load button next to your source and set the Snapshot Keeping Policy to Replace.

- Click on Load Data to initiate the extraction.

Update the Extraction Query

To partition an extensive data set, you'll need to modify the extraction query.

- Click on your source and navigate to the Advanced Settings tab.

- Locate the Statement field, which contains the SQL query responsible for data extraction.

- Add partitioning clauses to this query to break down the data extraction into manageable chunks. For example, you can use SQL syntax like LIMIT and OFFSET as shown below to extract the first 5 million rows from a table.

- Save your configuration.

SELECT * FROM mytable LIMIT 0, 5000000

Trigger Data Writing to the Storage

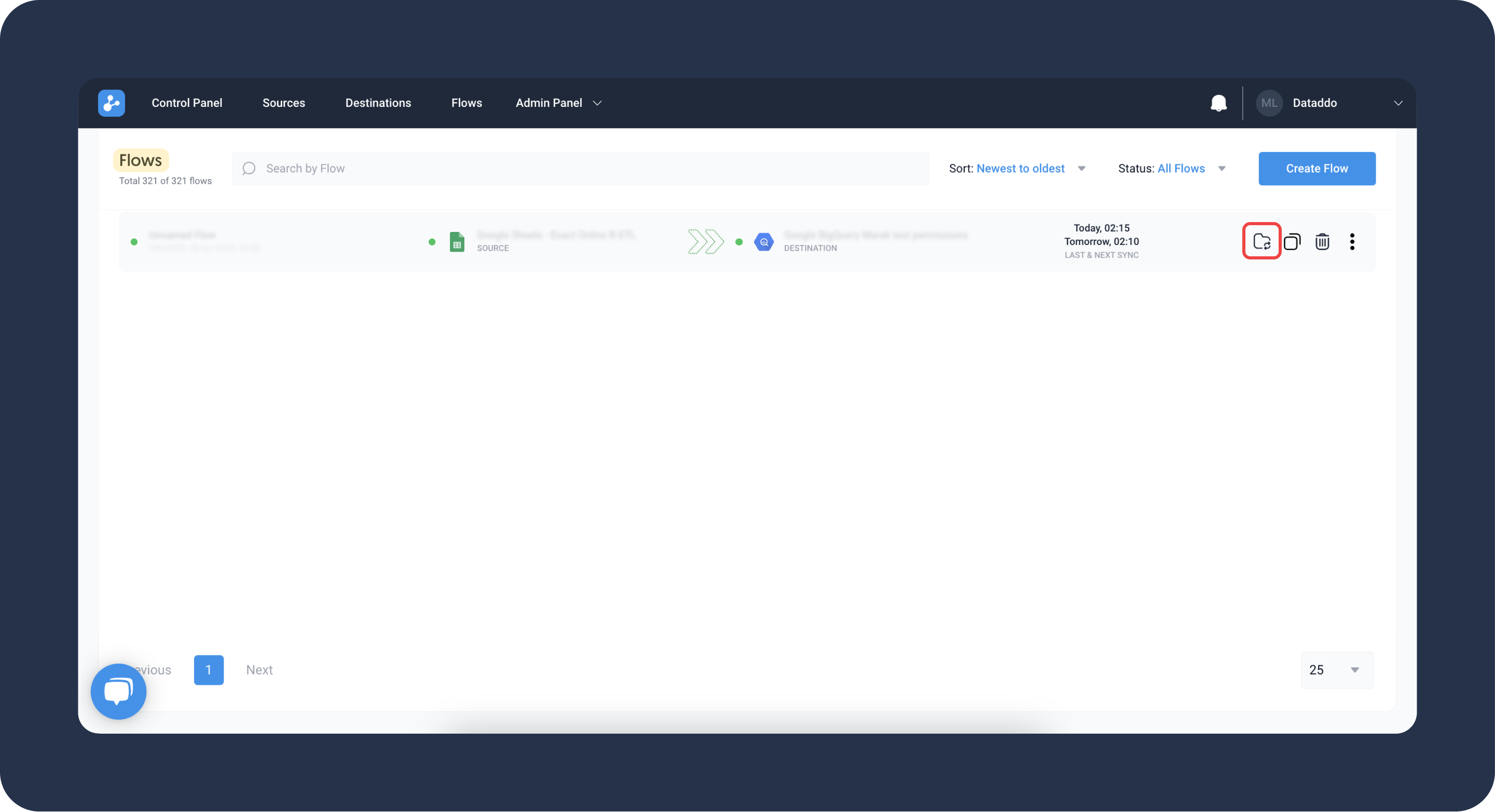

Before starting this operation, please check the write mode of your flow. We recommend using the upsert write mode, which either adds new records or updates existing ones, reducing the risk of duplicate entries.

- Go to the Flows page and click on the Manual Data Insert button next to your flow.

- Confirm the data insert and click on Insert Data.

- Confirm the operation was successful by checking logs or inspecting the warehouse directly.

Troubleshooting

Context Deadline Exceeded Error

rpc error: code = DeadlineExceeded desc = context deadline exceeded

This issue may be caused by extracting too many rows. Please update your extraction query to smaller partitions. The recommended maximum partition size is 5M rows.

Cannot Change Write Mode to Upsert in Existing Flows

Changing to the upsert write mode for an existing flow is not possible for some specific destinations like MySQL. In these cases, Dataddo creates indexes to ensure smooth system operations and as such, write mode chages to existing flows are restricted.

Use one of the following workarounds:

- If you can lose the data in the existing table without consequences or recover it through backfilling:

- Re-create the flow using the upsert write mode to ensure that the table and indexes are correctly established.

- If preserving the data is crucial:

- Delete the existing flow.

- Add a unique key index on the columns you wish to include in the composite key. For example, using the SQL query below.

- Create a new flow with upsert as the write mode and a composite key that incorporates the column on which you created the unique index.

ALTER TABLE table_name

ADD UNIQUE INDEX unique_index_name (column1, column2);