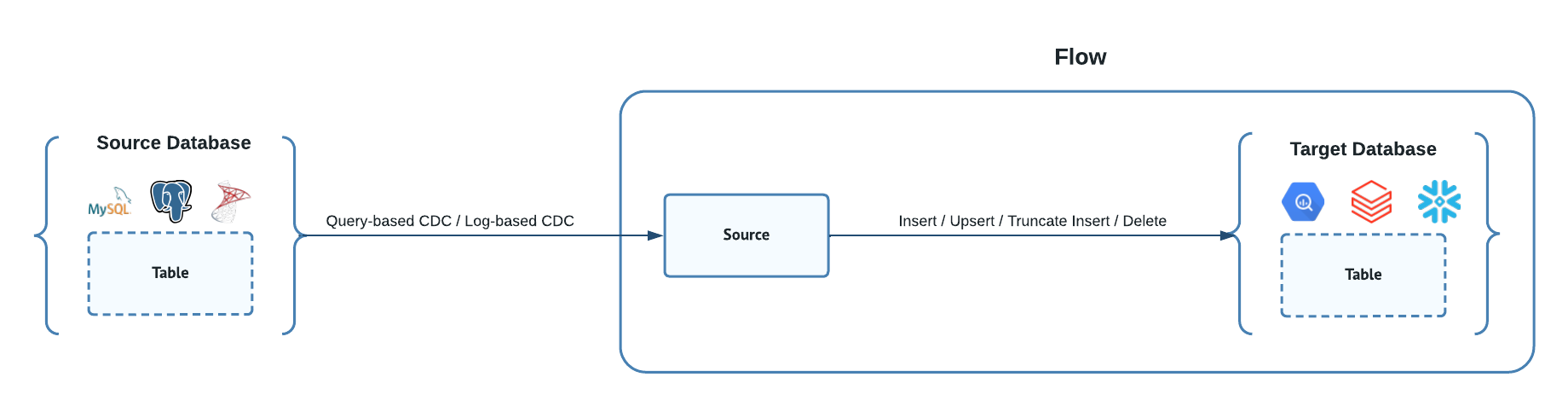

Database replication is vital for ensuring data accessibility and consistency across platforms. While many focus on same-technology replication like MySQL to MySQL, Dataddo excels in cross-platform replication, facilitating smooth data transfers from platforms like MySQL to BigQuery. This approach streamlines the automation of sources, flows, and tables creation, making your data readily available and harmonized for analysis.

In Dataddo, replication is designed on a table-by-table basis. This means that each table you wish to replicate necessitates its own unique flow. While this offers granularity, it could seem daunting when considering a whole database with multiple tables. To streamline this, Dataddo features an embedded tool that automates this deployment process. With a few configurations, you can efficiently roll out replication across an entire database, as the tool automatically generates flows for each table, saving you both time and effort.

Understanding your Data

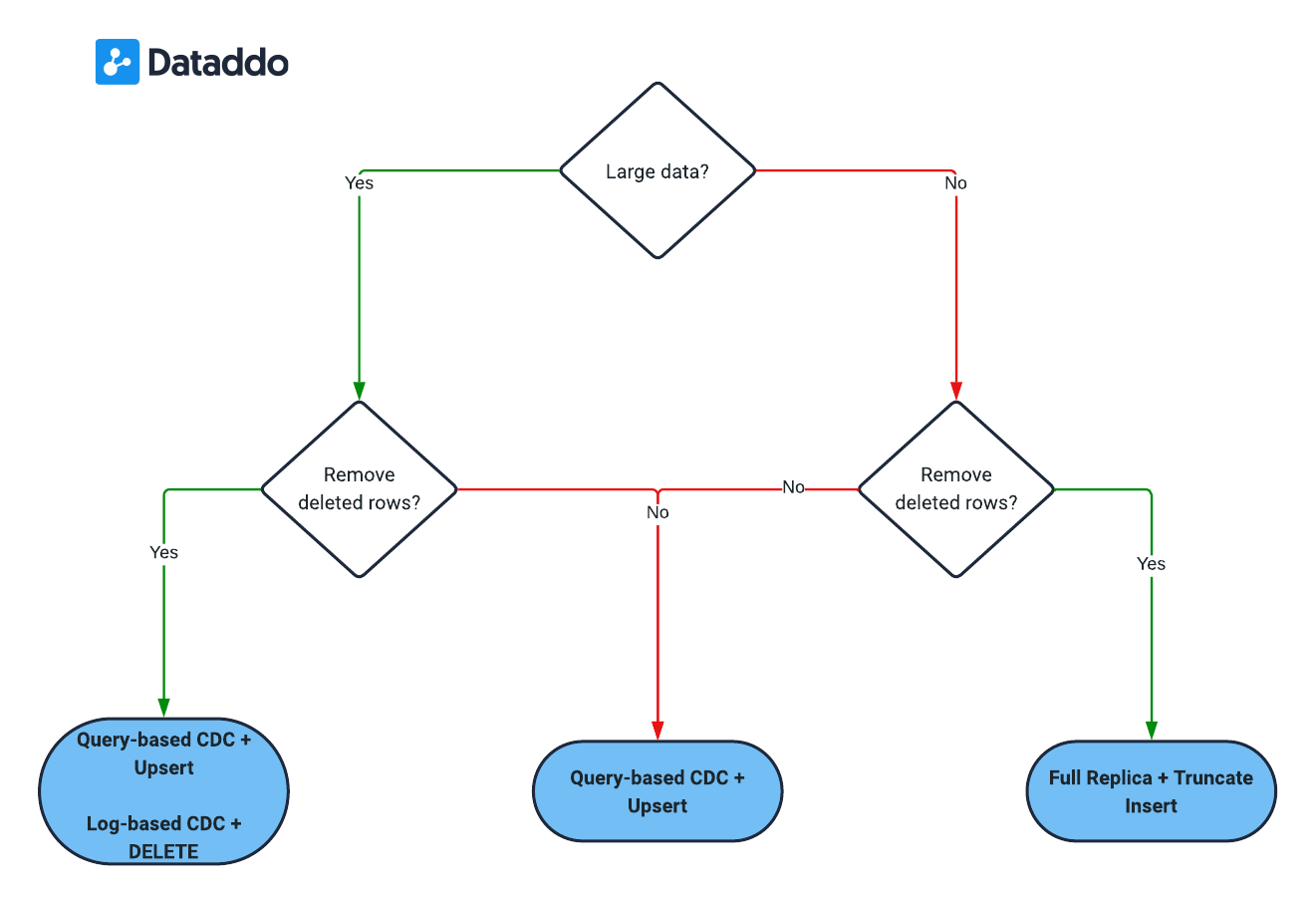

Before diving into the specifics of data configuration, it's vital to have a solid understanding of your data. This means recognizing its unique properties, how it changes over time, and how these changes should be reflected in your analysis.

Please refer to the decision schema below, which is designed to help you better understand your data.

Is data large?

For the purposes of this configuration, data is considered 'large' if a table contains more than roughly 150K rows.

Do deleted rows need removing?

One critical consideration in database replication is determining how to manage rows that have been deleted from the source. Specifically, should the data extraction process account for these deletions?

As an example, if a record is removed from the source table, should the corresponding entry in the destination table be deleted as well? If the answer is 'yes', then it's imperative to ensure the replication process handles such deletions accurately.

Change Data Capture (CDC) Capabilities in Dataddo

CDC, or Change Data Capture, is a technique in database replication that detects and captures changes to data. By focusing only on recent alterations, CDC optimizes data transfers, facilitating timely synchronization and consistent data across systems. In Dataddo you can utilize either Query-based CDC or Log-based CDC

Log-based CDC

Log-based CDC captures changes by monitoring a database's transaction logs. These logs record all data modifications, such as inserts, updates, and deletes. Using these logs, only the latest changes are replicated to the target system, ensuring efficiency and near real-time synchronization. This method is ideal for databases with frequent data changes.

Query-based CDC

Query-based CDC identifies changes by routinely querying the source database. It often uses timestamp columns or versioning fields to pinpoint new or updated records. Unlike log-based CDC, this method doesn't rely on transaction logs but needs specific columns to track record changes. It's simpler to set up in some databases but might be less efficient for high-transaction-rate databases due to potential performance impacts from frequent queries.

Configurations

Full Replica & Truncate Insert

This configuration is tailored for scenarios where a user has a relatively low volume of data in the source table and wants to ensure that deleted rows from the source are also removed in the target table.

- Connector Selection. Start by choosing a standard query-based CDC connector that supports the use of SELECT queries. Suitable connectors for this purpose include MySQL, Postgres, or SQL Server.

- Creating a Source. Through the UI, specify all the columns you wish to gather periodically. If necessary, you can fine-tune the SELECT query for more specific data extraction. This setup will automatically pull all the data available based on the specified query.

- Flow Configuration. While setting up the flow, ensure you select truncate insert as your write mode. This mode ensures that every time data is replicated, the target table is first cleared (or truncated) and then freshly populated with the data from the source, effectively mirroring any deletions from the source.

Query-based CDC & Upsert

This configuration caters to scenarios where there's an extensive amount of data in the source table, but the removal of deleted rows in the destination table isn't a requirement.

- Connector Selection. Start by choosing a standard query-based CDC connector that supports the use of SELECT queries. Suitable connectors for this purpose include MySQL, Postgres, or SQL Server. All such connectors are labelled as "Query-based CDC".

- Query Specification. During the connector configuration, you'll need to define a SELECT query that will pinpoint only the altered data. It's vital to scrutinize the table's structure at this stage to ensure the presence of a column (e.g., updated_at) that can assist in capturing changes. Please refer the the example query below.

- Flow Configuration. After setting up the connector, you'll need to establish a Flow using the Upsert write mode. This mode facilitates the insertion of new records and the updating of existing records based on a unique identifier. It's essential to choose a composite key that mirrors the unique identity of the rows. Ideally, this should correspond to the primary key of the source table.

SELECT * FROM your_table_name

WHERE updated_at >= NOW() - INTERVAL 3 DAY;

Query-based CDC & Upsert with Log-based CDC & Delete

By leveraging both Query-based CDC for upserts and Log-based CDC for deletions, you achieve comprehensive replication. This dual approach necessitates setting up two distinct flows: one to capture inserted and updated rows, and another for deleted rows. For guidance on the Query-based CDC & Upsert setup, please refer to the previous section. To set flow handling deletions, please follow these steps:

- Connector Selection. Choose a suitable log-based CDC connector according to your database type, such as MySQL Binlog or Postgres WAL. These connectors are designed to track changes directly from database logs, capturing all modifications including deletions. All such connectors are labelled as "Log-based CDC".

- Configuration of the Connector. While setting up the connector, ensure that you select the primary key of the source table, which will ideally be used to identify deleted rows. This key should offer a unique identifier for each record in your table.

- Flow Configuration. After setting up the connector, you'll need to establish a Flow with the Delete write mode. During this setup, make sure to use the primary key (which you've identified for deleted rows) as the Composite key. By doing this, any records that have been deleted from the source table will also be removed in the destination table, maintaining consistency between both locations.

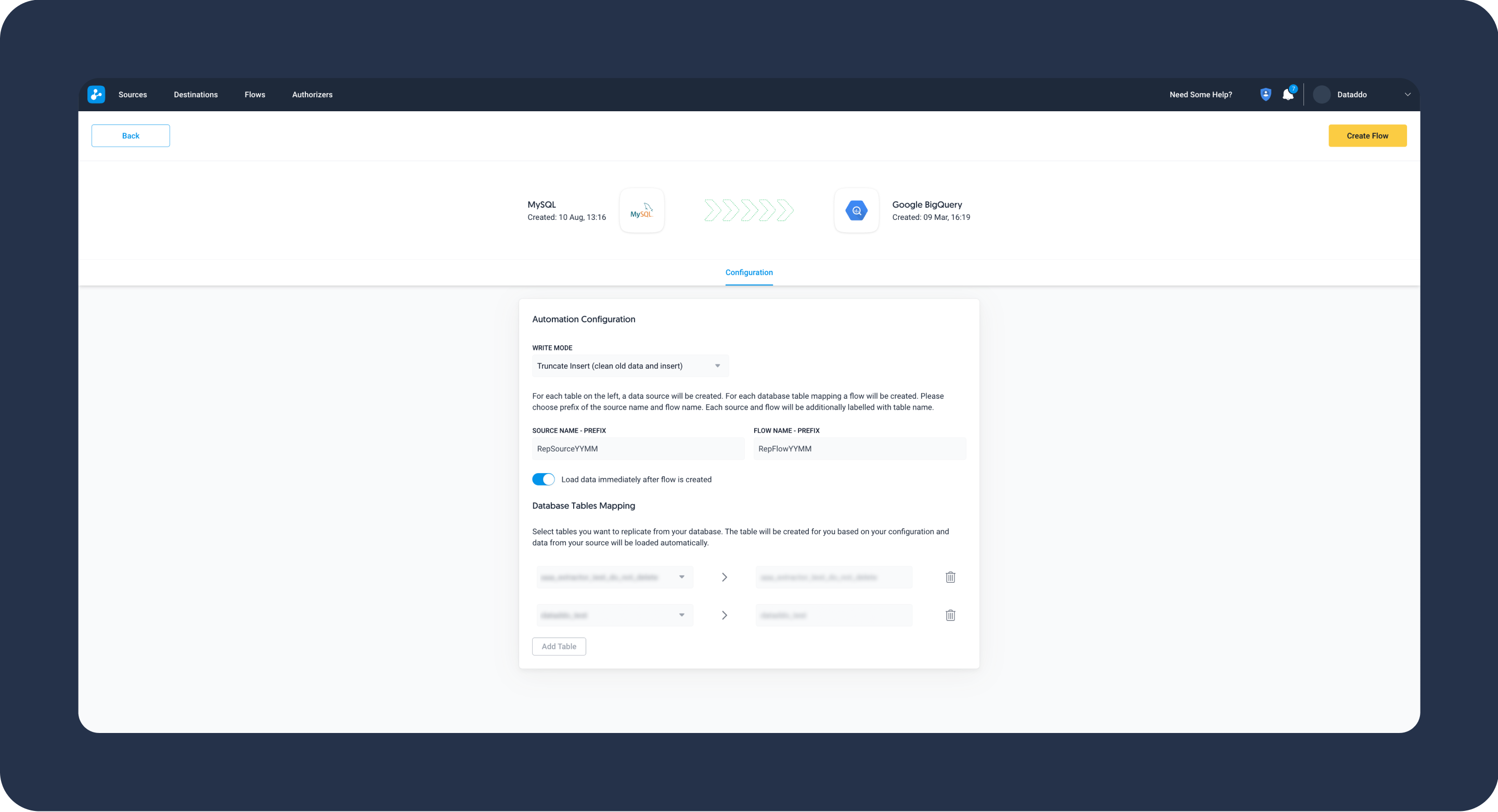

Automate Flow Provisioning for Database Replication

- Under Settings, go to the Automations section and click on Try Out.

- Connect the database you would like to replicate.

- Connect the database you want to send data to.

- Set write mode to truncate insert. If a different write mode is required, please edit the flow once it's provisioned.

- A new source and a flow will be automatically created for each of your tables. Choose a prefix which will be added to your newly created sources and flows.

- Select which tables you would like to replicate.

- Click on Create Flow.

When using automatic provisioning for database replication, the default configuration of Full Replica & Truncate Insert is applied. If you require a different configuration, please adjust the settings after the flows have been provisioned.

Limitations

Empty Tables

Please keep in mind that you cannot replicate empty tables. If your table is empty, table creation will return an error.