Source extraction logs are listed first. For data flow extraction logs, see Flow Extraction Logs below.

Source Extraction Logs

No Action Required

| Log Message | Meaning |
|---|---|
| Extraction enqueued | Your extraction has been enqueued and will be processed shortly. No action is needed. |
| Extraction successfully finished | Your extraction was successful and no errors were found. |

Reauthorize Your Account

| Error Message | Solution |
|---|---|
| Client error: 'POST https://accounts.google.com/o/oauth2/token' resulted in a '400 Bad Request' response: { "error": "invalid_grant", "error_description": "Token has been expired or revoked." } | Your account needs to be reauthorized. Navigate to Authorizers and reauthorize your source. |
| Error unauthorized_client - refresh_token is invalid | Your account is unauthorized. Navigate to Authorizers and add your service. |
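If you review many logs at once, you can pull the OAuth error code out of messages like the first one above. This is a minimal sketch, not part of Dataddo: `oauth_error_code` is a hypothetical helper, and it assumes the JSON error body is the text between the first `{` and the last `}` of the log message.

```python
import json
from typing import Optional


def oauth_error_code(log_message: str) -> Optional[str]:
    """Return the OAuth 2.0 "error" code from a log message, or None.

    Assumes the JSON error body is the text between the first "{" and the
    last "}" of the message, as in the Google token endpoint errors above.
    """
    start = log_message.find("{")
    end = log_message.rfind("}")
    if start == -1 or end < start:
        return None  # no JSON body in this message
    try:
        body = json.loads(log_message[start:end + 1])
    except json.JSONDecodeError:
        return None  # braces present, but not valid JSON
    error = body.get("error") if isinstance(body, dict) else None
    return error if isinstance(error, str) else None


msg = (
    "Client error: 'POST https://accounts.google.com/o/oauth2/token' resulted in a "
    "'400 Bad Request' response: { \"error\": \"invalid_grant\", "
    "\"error_description\": \"Token has been expired or revoked.\" }"
)
# "invalid_grant" (RFC 6749, section 5.2) means the grant or refresh token is expired or revoked.
print(oauth_error_code(msg))  # invalid_grant
```

A result of `invalid_grant` corresponds to the first row of the table: reauthorize the source in Authorizers.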

No Data Retrieved

| Error Message | Solution |

|---|---|

| All requests to target systems failed | When trying to extract your data, all requests failed. For more information on what went wrong, you can test your extraction. Click on the three dots on your source and select Test Extraction. |

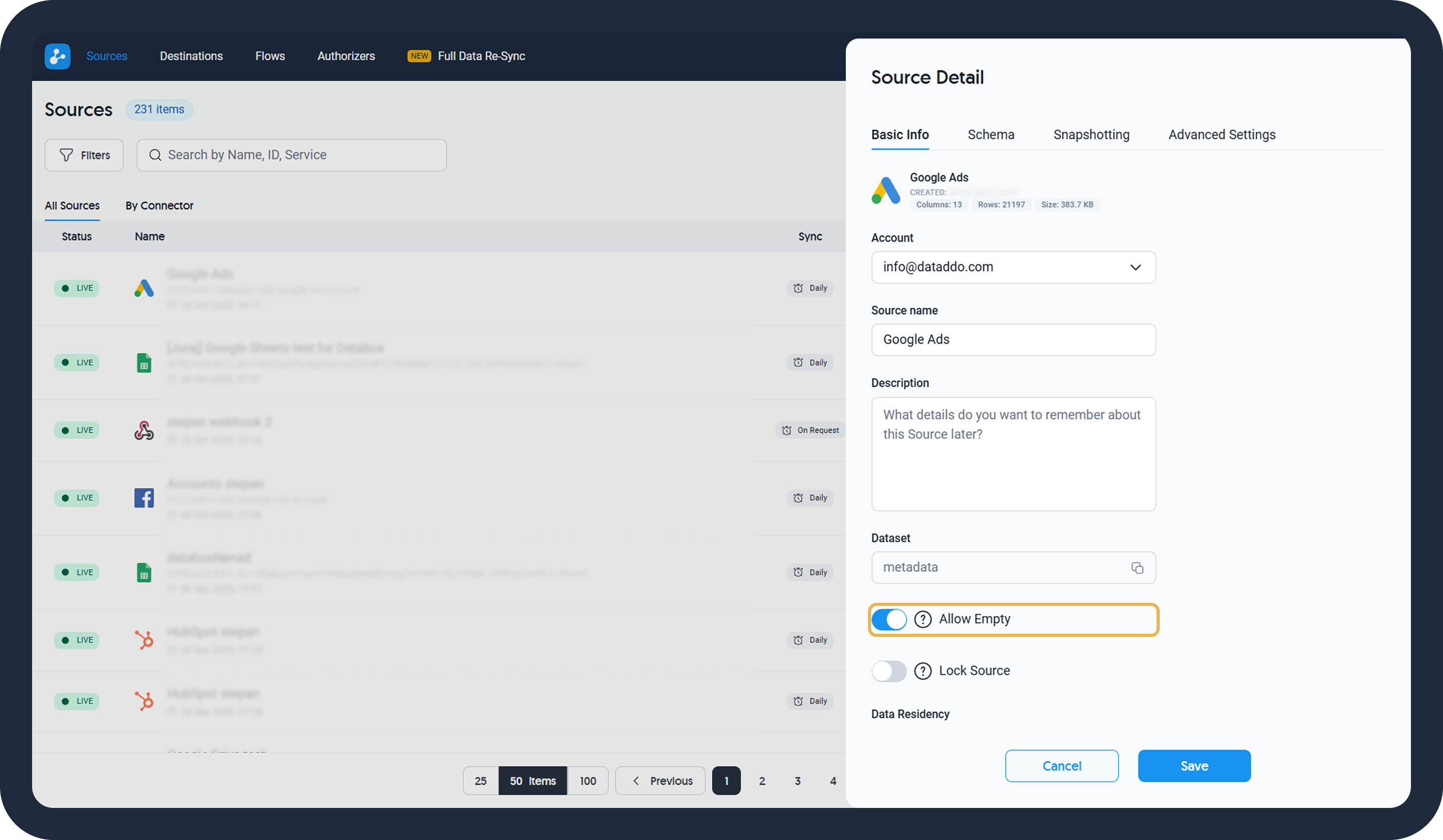

| No data retrieved. Enable extraction's 'allow empty' rule if you accept empty datasets | No data was found when extracting data. In case this is expected behavior (e.g. you don’t have orders everyday or don’t run ads daily), you can enable the “allow empty” function during the data source creation. For an existing data source, click on it and scroll down on the Basic Info tab to enable the function.  |

Reschedule Synchronization Time

| Error Message | Solution |
|---|---|
| Another extraction with exactly same parameter was executed in parallel with this one. Please reschedule your conflicting extractions. | If multiple data sources have identical configurations, schedule their syncs at least 15 minutes apart to avoid conflicts and performance issues. |
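To check a group of identically configured data sources against the 15-minute rule, you can compare their sync times pairwise. This is a minimal sketch that assumes each source syncs once a day at a fixed time; `conflicting_pairs` and the source names are hypothetical and not part of Dataddo.

```python
from datetime import time
from itertools import combinations

MIN_GAP_MINUTES = 15  # minimum spacing recommended above
MINUTES_PER_DAY = 24 * 60


def minutes_of_day(t: time) -> int:
    return t.hour * 60 + t.minute


def conflicting_pairs(schedules: dict[str, time], min_gap: int = MIN_GAP_MINUTES) -> list[tuple[str, str]]:
    """Return pairs of sources whose daily sync times are less than min_gap minutes apart."""
    conflicts = []
    for (name_a, t_a), (name_b, t_b) in combinations(schedules.items(), 2):
        diff = abs(minutes_of_day(t_a) - minutes_of_day(t_b))
        gap = min(diff, MINUTES_PER_DAY - diff)  # 23:55 and 00:05 are 10 minutes apart
        if gap < min_gap:
            conflicts.append((name_a, name_b))
    return conflicts


# Hypothetical sources that share the same configuration.
schedules = {
    "orders_eu": time(6, 0),
    "orders_us": time(6, 10),
    "orders_apac": time(6, 30),
}
print(conflicting_pairs(schedules))  # [('orders_eu', 'orders_us')]
```

Moving `orders_us` to 06:15 or later (and keeping `orders_apac` at least 15 minutes after it) would clear the conflict.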

Change Source Schema

| Error Message | Solution |
|---|---|
| Failed to save data to storage: Failed to update source data: Server error: 'PUT https://storage.prod.dataddo.com/v1.0/append' resulted in a '500 Internal Server Error' response:\n{"status":"Internal Server Error","error":"replace: Failed to cast values of column 'column_A' (type:integer, id:XXXXXX): failed to cast string value '--' to integer"} | Change the data type of column_A. In this example, change it from integer to string, because the source returns string values such as '--'. For more information, refer to the article on data types. |
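Before changing a column type, you can check which sample values would fail an integer cast. This minimal sketch uses Python's `int()` as a stand-in for the storage's cast rules, which may differ; `non_integer_values`, `suggested_type`, and the sample values are hypothetical.

```python
def non_integer_values(values: list[str]) -> list[str]:
    """Return the values that Python's int() cannot parse."""
    bad = []
    for value in values:
        try:
            int(value)
        except ValueError:
            bad.append(value)
    return bad


def suggested_type(values: list[str]) -> str:
    """Suggest 'integer' only if every value parses as an integer, else 'string'."""
    return "integer" if not non_integer_values(values) else "string"


column_a = ["12", "7", "--", "40"]  # hypothetical sample of column_A values
print(non_integer_values(column_a))  # ['--']
print(suggested_type(column_a))      # string
```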

Flow Extraction Logs

No Action Required

| Log Message | Meaning |
|---|---|
| Job enqueued | Your extraction has been enqueued and will be processed shortly. No action is needed. |
| [Number] rows written | [Number] rows were successfully loaded into the data destination. |

Reauthorize Your Account

| Error Message | Solution |
|---|---|
| Erroneous communication with Google Big Query API. | Navigate to Authorizers and reauthorize Google BigQuery. |
| Failed to enqueue write job. | Writing your data to the data destination failed. Navigate to Authorizers and reauthorize your destination. |
| Client error: 'POST https://accounts.google.com/o/oauth2/token' resulted in a '400 Bad Request' response: { "error": "invalid_grant", "error_description": "Bad Request" } | Your authorization has expired or is invalid. Navigate to Authorizers and reauthorize your data destination. |
| Stream transfer: write data from stream: ZOHO write: upload file to Zoho: destination response URL: https://www.zohoapis.eu/crm/v3/org: status_code: 403 response: {"code":"CRMPLUS_TRIAL_EXPIRED","details":{},"message":"CRM plus trial expired","status":"error"} | Your Zoho CRM Plus trial has expired. Upgrade your Zoho CRM account. |

Change Table Schema

| Error Message | Solution |
|---|---|
| Json unmarshal: Failed to convert value GBQ data type: Column 'gadate' schema is set to 'TIMESTAMP' and thus the 'integer' type values cannot be inserted to it | The data type of the affected column is incompatible with the column's data type in your data destination. Do one of the following: 1. Change the data type in your data source to date. 2. Change the data type in your data destination to integer. |
| Stream transfer: write data from stream: [Unknown][][] Erroneous communication with Google Big Query API: failed to prepare table: Failed to create new table '407589665707:data_writer_test.blended_sources_test': googleapi: Error 400: Field column_A already exists in schema, invalid | The field column_A appears more than once in the schema. Click on your data source and rename the duplicate field in the Schema tab. |
| "error": "reading current structure: verifying table name: there is more than one table matching "facebook_ads": [company_production.facebook_ads company_staging.facebook_ads]" | There are two tables named facebook_ads in your storage. Do one of the following: 1. Remove one of the facebook_ads tables. 2. Use the full path in the flow configuration; for example, use company_production.facebook_ads instead of facebook_ads. |
| Table does not contain 'app_clicks' column | This error is caused by a schema mismatch between the data source and the data destination table: the app_clicks column is missing from the destination table. Also check that the data flow writes to the correct destination table. Each flow should send data to a different table. |
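Three of the errors above (incompatible type, duplicate field, missing column) can be spotted before running a flow by comparing the source schema with the destination table. This is a minimal sketch, not Dataddo's own check: `compare_schemas` is hypothetical, it assumes both schemas are available as `(name, type)` pairs that use the same type names (real systems name types differently, e.g. BigQuery's `INT64`), and it compares column names case-insensitively, as BigQuery does.

```python
Schema = list[tuple[str, str]]  # (column name, type name), in column order


def compare_schemas(source: Schema, destination: Schema) -> dict[str, list[str]]:
    """Report duplicate, missing, and type-mismatched source columns."""
    report: dict[str, list[str]] = {
        "duplicate_in_source": [],
        "missing_in_destination": [],
        "type_mismatch": [],
    }
    # Names are compared case-insensitively: BigQuery treats Column_A and column_a as the same column.
    dest_types = {name.lower(): col_type.upper() for name, col_type in destination}
    seen: set[str] = set()
    for name, col_type in source:
        key = name.lower()
        if key in seen:
            report["duplicate_in_source"].append(name)
            continue  # the first occurrence has already been checked
        seen.add(key)
        if key not in dest_types:
            report["missing_in_destination"].append(name)
        elif dest_types[key] != col_type.upper():
            report["type_mismatch"].append(name)
    return report


source = [("gadate", "INTEGER"), ("column_A", "STRING"), ("Column_A", "STRING"), ("app_clicks", "INTEGER")]
destination = [("gadate", "TIMESTAMP"), ("column_A", "STRING")]
print(compare_schemas(source, destination))
# {'duplicate_in_source': ['Column_A'], 'missing_in_destination': ['app_clicks'], 'type_mismatch': ['gadate']}
```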
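The "more than one table matching" error occurs when a bare table name matches tables in several datasets. The sketch below reproduces that rule under the assumption that names take the form `dataset.table`; `resolve_table` is hypothetical and only mimics the behavior the error describes, not Dataddo's actual lookup.

```python
def resolve_table(name: str, tables: list[str]) -> str:
    """Resolve a table name against fully qualified "dataset.table" names.

    A bare name matches every table with that name in any dataset; a
    qualified name must match exactly. Raises ValueError unless exactly
    one table matches.
    """
    if "." in name:
        candidates = [t for t in tables if t == name]
    else:
        candidates = [t for t in tables if t.split(".")[-1] == name]
    if not candidates:
        raise ValueError(f"no table matching {name!r}")
    if len(candidates) > 1:
        raise ValueError(f"more than one table matching {name!r}: {candidates}")
    return candidates[0]


tables = ["company_production.facebook_ads", "company_staging.facebook_ads"]
print(resolve_table("company_production.facebook_ads", tables))  # company_production.facebook_ads
try:
    resolve_table("facebook_ads", tables)
except ValueError as err:
    print(err)
```

Using the full path always yields a single match, which is why it resolves the error without removing either table.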

Connector Specific Logs

| Error Message | Solution |
|---|---|
| Stream transfer: write data from stream: writing to sheet: Failed to write data to sheet by columns: Failed to write "USER_ENTERED" data batch to sheet: googleapi: Error 400: Invalid data[0]: This action would increase the number of cells in the workbook above the limit of 10000000 cells., badRequest | The spreadsheet has reached the Google Sheets limit of 10,000,000 cells. Select another spreadsheet as the data destination. |
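You can estimate whether a write will fit before choosing a destination spreadsheet. This is a rough sketch under stated assumptions: `existing_cells` is the current total grid size across all sheets in the spreadsheet (Google Sheets counts every cell in each sheet's grid, including empty ones), and each new row is exactly `columns` cells wide. The function names are hypothetical.

```python
SHEETS_CELL_LIMIT = 10_000_000  # limit quoted in the error message above


def cells_after_write(existing_cells: int, new_rows: int, columns: int) -> int:
    """Grid size after appending new_rows rows that are `columns` cells wide."""
    return existing_cells + new_rows * columns


def fits_in_spreadsheet(existing_cells: int, new_rows: int, columns: int,
                        limit: int = SHEETS_CELL_LIMIT) -> bool:
    """True if the write stays at or below the limit (the error fires only above it)."""
    return cells_after_write(existing_cells, new_rows, columns) <= limit


# 9,000,000 existing cells + 50,000 rows x 20 columns = exactly 10,000,000 cells.
print(fits_in_spreadsheet(9_000_000, 50_000, 20))  # True
print(fits_in_spreadsheet(9_000_000, 50_001, 20))  # False
```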