Azure Blob Storage is a cloud-based object storage service provided by Microsoft Azure. It stores unstructured data, such as documents, images, videos, and backups, in a scalable, durable, and secure way, which makes it suitable for a wide range of cloud applications and scenarios.

Prerequisites

- You have already created a Storage Account that contains at least one Container.

- You have allowed incoming connections to your server from Dataddo IPs.

- You have generated a Shared Access Token to authorize the connection to your storage.

Authorize Connection to Azure Blob Storage

In Azure Portal

Add a Storage Account

- In Azure Portal in the Storage Accounts section, click on Create.

- Fill in Storage account name. You will need to provide this value during configuration in Dataddo.

- Use the default configuration. If you want to fine-tune it, make sure the following settings are configured:

- In the Advanced tab: allow both Enable storage account key access and Allow enabling public access on individual containers.

- Make sure that in the Networking tab, Enable public access from all networks is selected.

- Review and create the Storage Account.

Add a Container in Storage Account

- In Azure Portal's Storage Accounts section, select the storage account you want to use, go to Containers, and click on Add new container.

- Set the name of the container. You will need to provide this value during configuration in Dataddo.

- Use the default Private (no anonymous access) access configuration.

Generate Shared Access Token

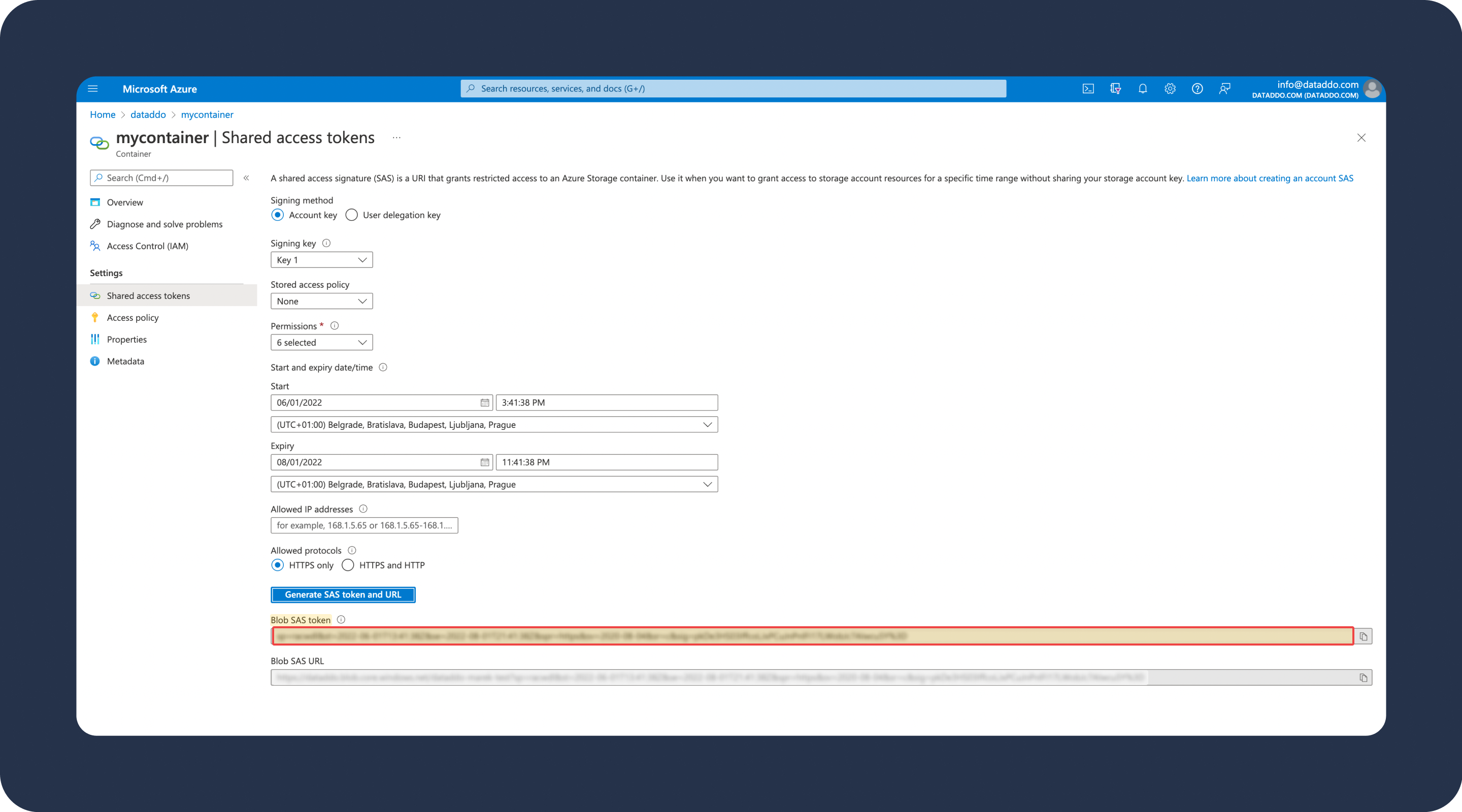

- In the Storage Account section, select the Container you want to use and, under Settings, click on Shared access tokens.

- Set Permissions to Read, Add, Create, Write, Delete, List, and Move.

- Set Expiry to a value that complies with your security policies. We recommend setting it to at least 6 months (after the token expires, you will need to reauthorize the connection).

- Leave Allowed IP addresses empty. We recommend this because the field accepts only a single IP address range, not a list of multiple IP addresses. The connection is secured by a unique token, so there are no security implications.

- Set Allowed protocols to HTTPS only.

- Click on Generate and make sure to copy the value of Blob SAS Token. You will need to provide this value during configuration in Dataddo.
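Before pasting the token into Dataddo, it can help to confirm that it carries the settings chosen above. The following is a minimal sketch, not part of Dataddo or the Azure SDK: it assumes the token is the usual SAS query string, in which the `sp` field lists permission letters and `spr` lists the allowed protocols. The function name and the example token (including its placeholder signature) are hypothetical.

```python
from urllib.parse import parse_qs

# Permission letters used in the `sp` field of a SAS token.
PERMISSION_LETTERS = {
    "r": "Read", "a": "Add", "c": "Create", "w": "Write",
    "d": "Delete", "l": "List", "m": "Move",
}


def describe_sas_token(token: str) -> dict:
    """Report the permissions and protocol setting encoded in a SAS token."""
    fields = parse_qs(token.lstrip("?"))
    granted = fields.get("sp", [""])[0]
    return {
        # Permissions present in the token, in the order they appear.
        "permissions": [PERMISSION_LETTERS[ch] for ch in granted if ch in PERMISSION_LETTERS],
        # Permissions recommended in this guide that the token lacks.
        "missing": [name for ch, name in PERMISSION_LETTERS.items() if ch not in granted],
        # True only when the token allows HTTPS and nothing else.
        "https_only": fields.get("spr", [""])[0] == "https",
    }


# Hypothetical token for illustration only; the signature is a placeholder.
example = "sp=racwdlm&se=2024-07-01T00:00:00Z&spr=https&sr=c&sig=PLACEHOLDER"
info = describe_sas_token(example)
```

If `info["missing"]` is not empty or `info["https_only"]` is false, regenerate the token with the settings listed above.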

In Dataddo

- In the Authorizers tab, click on Authorize New Service and select Azure Blob Storage.

- You will be asked to fill in the following fields:

- Storage Account. Provide the name of the storage account you want to use.

- Container. Provide the name of the container you want to use for reading or writing the data.

- Shared Access Token. Provide the value of the shared access token.

- After the account configuration, you will be redirected back to the Destination page, where you will need to fill in the Path. Use the name of the folder in your Container followed by a slash (e.g. database/).

- Click on Save.
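To check the three values (storage account, container, and shared access token) together outside Dataddo, one option is to send a List Blobs request to the Blob service endpoint. The sketch below is only an illustration of that idea and does not describe how Dataddo validates the connection. The function names and the sample values are hypothetical, and the request needs the List permission on the token.

```python
from urllib.parse import quote, urlencode
from urllib.request import urlopen


def build_list_url(account: str, container: str, sas_token: str, path: str = "") -> str:
    """Build a List Blobs URL for a container, optionally limited to a path prefix."""
    query = {"restype": "container", "comp": "list"}
    if path:
        query["prefix"] = path
    base = f"https://{account}.blob.core.windows.net/{quote(container)}"
    # The SAS token is already a query string, so it is appended unchanged.
    return f"{base}?{urlencode(query)}&{sas_token.lstrip('?')}"


def can_list(url: str) -> bool:
    """Return True if the service answers the listing request with HTTP 200."""
    try:
        with urlopen(url, timeout=10) as response:
            return response.status == 200
    except OSError:  # URLError and HTTPError are subclasses of OSError
        return False


# Hypothetical values for illustration only.
url = build_list_url("mystorageaccount", "mycontainer", "?sp=rl&sig=PLACEHOLDER", "database/")
```

Calling `can_list(url)` with real values performs the network request; a `False` result points to one of the causes listed in the Troubleshooting section.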

Create a New Azure Blob Storage Destination

- On the Destinations page, click on the Create Destination button and select the destination from the list.

- Select your authorizer from the drop-down menu.

- Name your destination and click on Save.

To authorize a new account, click on Add new Account in the drop-down menu during authorizer selection and follow the on-screen prompts. You can also go to the Authorizers tab and click on Add New Service.

Create a Flow to Azure Blob Storage

- On the Destinations page, click on the Create Destination button and select the destination from the list.

- Select your authorizer from the drop-down menu.

- Name your destination and click on Save.

To authorize a new account, click on Add new Account in the drop-down menu during authorizer selection and follow the on-screen prompts. You can also go to the Authorizers tab and click on Add New Service.

File Partitioning

File partitioning splits large datasets into smaller, manageable partitions, based on criteria like date. This technique enhances data organization, query performance, and management by grouping subsets of data with shared attributes.

During flow creation, you can:

- Select one of the predefined file name patterns.

- Define your own custom name to suit your partitioning needs.

Example of a custom file name

When creating a custom file name, use variations of the offered file names.

For example, use a base file name and add a different date range pattern:

xyz_{{1d1|Ymd}}

With this pattern, Dataddo creates a new file every day, using xyz as the base name and the date as the suffix, e.g. xyz_20xx0101, xyz_20xx0102, etc.

For more information, see Dynamic File Naming Patterns.
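The effect of a daily date suffix can be sketched in a few lines. This is an illustration only, not Dataddo's implementation: it assumes the `Ymd` part renders as a four-digit year, two-digit month, and two-digit day (as in the example above), and it does not model which day the `1d1` range selects. The function name is hypothetical.

```python
from datetime import date, timedelta


def dated_file_names(base: str, first_day: date, days: int) -> list[str]:
    """Return one file name per day: the base name plus a YYYYMMDD suffix."""
    return [
        f"{base}_{(first_day + timedelta(days=offset)).strftime('%Y%m%d')}"
        for offset in range(days)
    ]


# Three consecutive daily files starting on an arbitrary example date.
names = dated_file_names("xyz", date(2024, 1, 1), 3)
```

Each day produces a distinct file name, so earlier files are not overwritten by later writes.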

Troubleshooting

Server Failed to Authenticate the Request Error

stream transfer: write data from stream: write: uploading data to Azure Blob Storage: [0][AuthenticationFailed] Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature

This error can have the following causes:

- Invalid Shared Access Token

- Expired Shared Access Token

- Incorrect IP address restriction of Shared Access Token

- Invalid Container
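Two of these causes, an expired token and an IP restriction, can usually be read directly from the token. The sketch below assumes the token is a standard SAS query string in which `se` holds the expiry time in UTC and `sip` holds the allowed IP address or range. The function name and the sample tokens are hypothetical, and a clean result does not prove that the signature itself is valid.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs


def token_problems(token: str, now: datetime) -> list[str]:
    """List problems that can be detected from the SAS token fields alone."""
    fields = parse_qs(token.lstrip("?"))
    problems = []

    expiry = fields.get("se", [None])[0]
    if expiry is None:
        problems.append("no expiry field (se) found")
    else:
        expires_at = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
        if expires_at.tzinfo is None:  # date-only values are treated as UTC
            expires_at = expires_at.replace(tzinfo=timezone.utc)
        if expires_at <= now:
            problems.append(f"token expired at {expiry}")

    if "sip" in fields:
        problems.append(f"token is restricted to IP {fields['sip'][0]}")
    if "sig" not in fields:
        problems.append("no signature field (sig) found")
    return problems


# Hypothetical token for illustration only; the signature is a placeholder.
sample = "sp=racwdl&se=2024-07-01T00:00:00Z&sip=203.0.113.5&sig=PLACEHOLDER"
found = token_problems(sample, datetime(2024, 8, 1, tzinfo=timezone.utc))
```

In practice, pass `datetime.now(timezone.utc)` as `now`. Each reported problem maps to one of the fixes described in the sections below.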

Invalid Shared Access Token Error

- Regenerate your Shared Access Token.

- Go to the Authorizers tab and click on your Azure Blob Storage authorizer.

- Insert the Blob SAS Token value into the Shared Access Token field and save the changes.

Expired Shared Access Token Error

- Regenerate your Shared Access Token and make sure that the Expiry date of the token is far enough in the future (we recommend at least 6 months).

- Go to the Authorizers tab and click on your Azure Blob Storage authorizer.

- Insert the Blob SAS Token value into the Shared Access Token field and save the changes.

Incorrect IP Address Restriction of Shared Access Token Error

- Regenerate your Shared Access Token and make sure that the Allowed IP addresses field is empty.

- Go to the Authorizers tab and click on your Azure Blob Storage authorizer.

- Insert the Blob SAS Token value into the Shared Access Token field and save the changes.

Invalid Container Error

- Go to the Authorizers tab and click on your Azure Blob Storage authorizer.

- Make sure that the container name is correct.
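A quick format check can catch typos such as uppercase letters or stray spaces before you compare the name with the Azure Portal. The sketch below applies Azure's container naming rules: 3 to 63 characters, lowercase letters, digits, and hyphens only, starting and ending with a letter or digit, with no consecutive hyphens. The function name is hypothetical, and special containers such as `$root` are deliberately not covered.

```python
import re

# Lowercase alphanumeric groups separated by single hyphens.
CONTAINER_NAME = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_container_name(name: str) -> bool:
    """Check a container name against Azure's length and character rules."""
    return 3 <= len(name) <= 63 and CONTAINER_NAME.fullmatch(name) is not None


# Example names for illustration only.
checks = {name: is_valid_container_name(name) for name in ["my-container", "My_Container", "a--b"]}
```

A name that passes this check can still be wrong if it does not match an existing container, so the comparison with the Azure Portal remains necessary.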

No Such Host Error


stream transfer: write data from stream: write: sending data: Put "https://address.com": dial tcp: lookup something.blob.core.windows.net on 0.0.0.0: no such host

This error is caused by an incorrect storage account name.

- Go to the Authorizers tab and click on your Azure Blob Storage authorizer.

- Make sure that the storage account is correct.
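The host in the error message is derived from the storage account name, so a mistyped name produces a host that does not exist. The sketch below rebuilds that host and checks whether it resolves in DNS. It assumes the default public endpoint `<account>.blob.core.windows.net` and Azure's account naming rules (3 to 24 lowercase letters and digits). The function names and the sample account name are hypothetical.

```python
import re
import socket


def blob_host(account: str) -> str:
    """Return the default Blob service host for a storage account name."""
    if not re.fullmatch(r"[a-z0-9]{3,24}", account):
        raise ValueError(f"not a valid storage account name: {account!r}")
    return f"{account}.blob.core.windows.net"


def resolves(host: str) -> bool:
    """Return True if the host name can be resolved in DNS."""
    try:
        socket.getaddrinfo(host, 443)
        return True
    except socket.gaierror:
        return False


# Hypothetical account name for illustration only.
host = blob_host("mystorageaccount")
```

If `resolves(host)` returns `False` for your real account name, the name entered in the authorizer most likely does not match an existing storage account.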